New AI Architectures Emerge

The key to the future of life is AI.

As a result, the battle for mindshare and market share in AI heats up every day.

Companies are fighting it out, and the way to win hearts, minds and territory is by having killer tech.

That is why deep inside the skunkworks of Google, Microsoft, Mistral, OpenAI, Anthropic and 200+ other companies sits a Research & Development function.

They are working on ways to increase model performance, output reliability and more. Here’s a quick rundown of the key areas:

Efficiency Improvements: There's ongoing research to make transformers more efficient in terms of computational resources. Techniques like pruning, quantization, and more efficient attention mechanisms (like linear transformers) are being developed to reduce the computational load and make these models more accessible.

Multimodal Transformers: Transformers that can process and understand multiple types of data (like text, images, and audio) simultaneously have seen significant development. This is especially useful for tasks that require a holistic understanding of different data types, such as generating image captions or multimodal translation.

Domain-Specific Transformers: The development of transformers tailored to specific industries or tasks, such as healthcare, finance, and legal, has been a notable trend. These models are trained on domain-specific data, enabling them to provide more relevant and accurate insights for industry-specific applications.

Explainability and Interpretability: As transformers become more complex, there's an increasing focus on making these models more explainable and interpretable. This involves developing methods to understand how these models make decisions and what factors influence their outputs.

Continual Learning and Adaptability: Efforts are being made to create transformers that can learn continually and adapt to new data without needing to be retrained from scratch. This is crucial for applications where data is continuously evolving.
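To make one of the efficiency techniques above concrete, here is a minimal sketch of quantization in plain Python. It assumes simple symmetric 8-bit quantization of a handful of weights. The function names and sample values are made up for illustration and are not taken from any particular library.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each weight to the nearest step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]


# Illustrative weights (assumed values, not from a real model).
weights = [0.82, -1.27, 0.05, 0.0, 0.4]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now needs one byte instead of four, and the rounding error per weight is at most half a quantization step. That size-for-precision trade is what makes the model cheaper to run.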

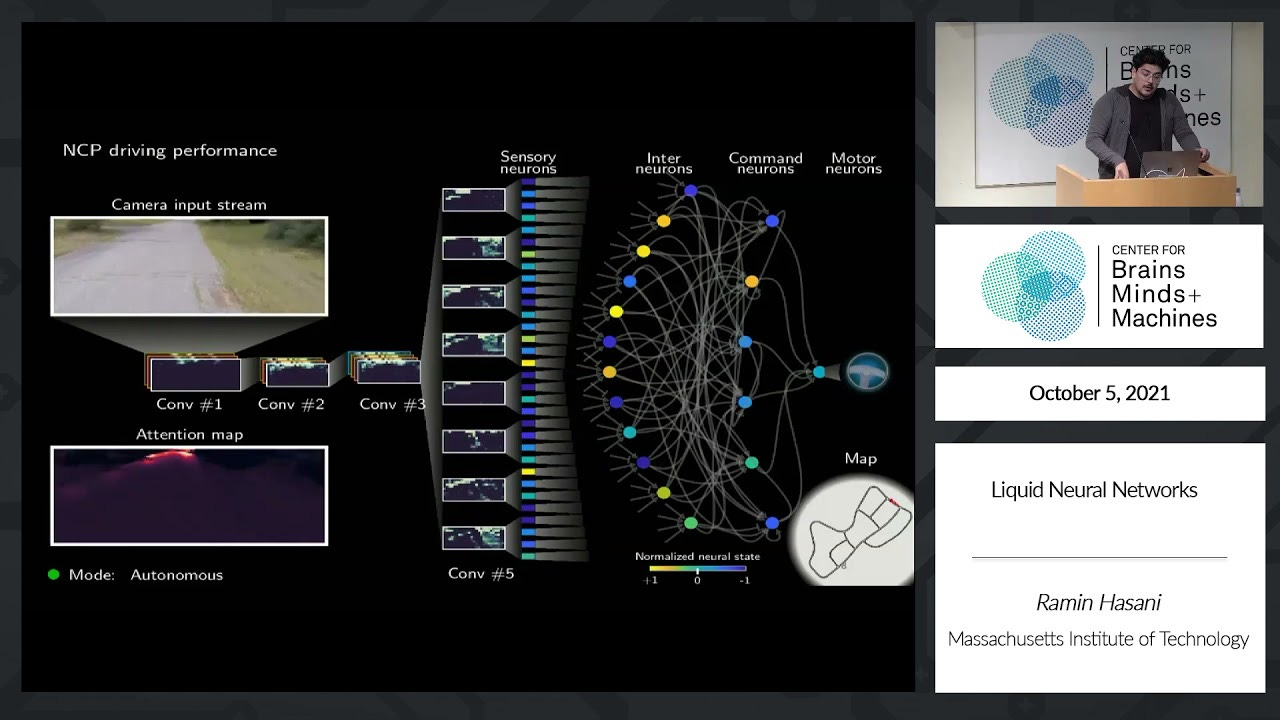

Some are trying new methods: Liquid AI, an MIT spinoff, is an excellent example of a company that is pushing R&D for AI into brand new territory.

This team is developing a novel AI model known as a liquid neural network.

These networks are inspired by the brains of roundworms and are characterized by their small size and low compute requirements.

Unlike traditional AI models, liquid neural networks have fewer parameters and neurons, making them more efficient and interpretable. Their unique feature is the ability to adapt and respond to changing conditions over time, even in scenarios they were not specifically trained for. This technology has shown promising results in tasks like autonomous navigation and analyzing time-fluctuating phenomena. Liquid AI aims to commercialize this technology for various applications.
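As a rough illustration of what "adapting over time" can mean, here is a toy single-neuron sketch loosely based on the published liquid time-constant formulation, where the input changes how quickly the neuron responds. This is a simplification under stated assumptions: the gate here depends only on the input, the parameter values are invented, and nothing below reflects Liquid AI's actual implementation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def ltc_step(x, u, dt=0.01, tau=1.0, A=1.0, w=2.0, b=0.0):
    """One explicit Euler step of dx/dt = -(1/tau + f) * x + f * A."""
    f = sigmoid(w * u + b)  # input-dependent gate in (0, 1); assumed form
    dx = -(1.0 / tau + f) * x + f * A
    return x + dt * dx


def effective_time_constant(u, tau=1.0, w=2.0, b=0.0):
    """The neuron's response speed shifts with the input it sees."""
    f = sigmoid(w * u + b)
    return tau / (1.0 + tau * f)


x = 0.0
trajectory = []
for step in range(1000):
    u = 1.0 if step < 500 else -1.0  # conditions change halfway through
    x = ltc_step(x, u)
    trajectory.append(x)

print(trajectory[499], trajectory[999])
print(effective_time_constant(1.0), effective_time_constant(-1.0))
```

The point of the sketch is that the time constant is not fixed. A strong input makes the neuron react faster, which is one way a small network can track changing conditions.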

Another approach is called Mixture of Experts, or MoE. Incorporating an MoE approach into large models can significantly improve their performance, efficiency, and applicability across various domains. This approach essentially involves creating a system where multiple specialized models (the "experts") work together, with a gating component deciding which experts handle each input, so each contributes its unique strengths to the overall task.

By having different models specialized in various aspects of a problem (e.g., language understanding, sentiment analysis, image recognition), the overall system can handle more complex tasks more effectively.

Each expert can focus on what it does best, leading to improved accuracy and efficiency.
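The routing idea can be sketched in a few lines of plain Python. This is a toy under stated assumptions: the three "experts" are trivial functions, the gate is a hand-written linear scorer, and the names `EXPERTS`, `GATE` and `moe_forward` are hypothetical. Real systems learn both the experts and the gate.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical experts: each one is just a simple function of the input.
EXPERTS = [
    lambda x: 2.0 * x,
    lambda x: x + 10.0,
    lambda x: -x,
]

# Hypothetical gate parameters: one (weight, bias) pair per expert.
GATE = [(1.0, 0.0), (-1.0, 0.0), (0.1, -2.0)]


def moe_forward(x, top_k=2):
    """Score every expert, run only the top_k, and blend their outputs."""
    scores = [w * x + b for w, b in GATE]
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in top])
    output = sum(wt * EXPERTS[i](x) for wt, i in zip(weights, top))
    return output, top, weights


out_pos, top_pos, w_pos = moe_forward(3.0)
out_neg, top_neg, w_neg = moe_forward(-3.0)
print(top_pos, top_neg)
```

Different inputs are routed to different experts, and only the selected experts run. That sparsity is where the efficiency gain comes from.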

Frankenstein Future

Fusing different models to form entirely new systems with powerful capabilities is a rapidly accelerating field.

One combination that many of the big tech companies are exploring is Transformers with Recurrent Neural Networks (RNNs), and for very good reasons.

These are the gains they are targeting.

Temporal Sequence Learning: RNNs are particularly good at handling sequences and remembering past information. By integrating RNNs with Transformers, an LLM could potentially improve its ability to understand and generate coherent and contextually relevant text over longer sequences.

Efficiency in Sequential Data Processing: RNNs process data sequentially, which can be more efficient for certain types of tasks where sequence order is crucial. This could complement the Transformer's parallel processing ability, making the combined model more versatile and efficient for a broader range of tasks.

Improved Long-term Dependency Learning: Transformers, while excellent at parallel processing and handling short-to-medium range dependencies, can sometimes struggle with very long-term dependencies due to the fixed-length context window. RNNs can help mitigate this limitation by providing a mechanism to carry information across longer sequences.

Resource Utilization: Combining these architectures might allow for more efficient resource utilization. For instance, the model could use the RNN component for tasks where sequential processing is more crucial, while leveraging the Transformer for tasks requiring parallel processing and attention mechanisms.

Enhanced Learning Dynamics: The learning dynamics of Transformers and RNNs are different. Combining them could potentially lead to a more robust learning process, as each can compensate for the weaknesses of the other.

Flexibility in Model Design: Such a hybrid approach allows for more flexibility in model design, enabling researchers to tailor the architecture to specific tasks or datasets more effectively.

However, there are also challenges associated with such a hybrid approach.

For instance, training and tuning a model that combines these different architectures can be complex.

There might also be issues related to how to effectively integrate the memory and attention mechanisms of both architectures. Despite these challenges, exploring hybrid models like this is a promising direction in AI research and could lead to significant advancements in the field.
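One hypothetical way to wire the two together, sketched in plain Python: attention runs inside each chunk of the sequence, while an RNN-style state carries a summary from one chunk to the next. Everything here is an assumption made for illustration (scalar tokens, the chunk size, the `decay` value, the function names), not a description of any production model.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scalar dot-product attention: weight values by query-key similarity."""
    weights = softmax([query * k for k in keys])
    return sum(w * v for w, v in zip(weights, values))


def hybrid_forward(tokens, chunk_size=4, decay=0.5):
    state = 0.0  # recurrent memory carried across chunks
    outputs = []
    for start in range(0, len(tokens), chunk_size):
        chunk = tokens[start:start + chunk_size]
        context = [state] + chunk  # attention can also see the carried memory
        for tok in chunk:
            outputs.append(attend(tok, context, context))
        # RNN-style update: blend the old state with a summary of this chunk.
        summary = sum(chunk) / len(chunk)
        state = math.tanh(decay * state + (1.0 - decay) * summary)
    return outputs, state


out_a, state_a = hybrid_forward([1.0, 1.0, 1.0, 1.0, 0.5, 0.2])
out_b, state_b = hybrid_forward([-1.0, -1.0, -1.0, -1.0, 0.5, 0.2])
print(out_a[4], out_b[4])
```

The last two tokens are identical in both runs, yet their outputs differ because the recurrent state remembers what came before. That is the integration question the paragraph above raises: how much the memory should influence the attention, and vice versa.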

Combining state space models with RNNs and transformers could lead to a powerful three-way hybrid architecture that leverages the strengths of each approach.

Leveraging State Space Models: State space models are adept at handling time series data, providing a framework to model dynamic systems where the current state is influenced by both the previous state and some external control inputs. In a machine learning context, this can be useful for tasks that involve predicting future states based on past observations.

Strengths of RNNs: RNNs are particularly good at processing sequences of data, making them suitable for tasks like speech recognition, language modeling, and time series forecasting. They carry a hidden state from step to step, which helps them keep track of context in time-dependent data, although plain RNNs tend to lose information over very long sequences.

Advantages of Transformers: Transformers excel in handling large sequences of data and can process entire sequences in parallel, which offers significant computational advantages. Their attention mechanism allows them to focus on relevant parts of the input data, making them particularly effective for complex tasks in NLP and beyond.
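For readers who have not met state space models before, here is a minimal sketch of the linear recurrence described above, in plain Python. The matrices `A`, `B` and `C` and the impulse input are arbitrary values chosen for illustration.

```python
def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def ssm_scan(A, B, C, inputs, x0):
    """Run x_t = A x_{t-1} + B u_t and y_t = C x_t over scalar inputs u_t."""
    x = list(x0)
    outputs = []
    for u in inputs:
        # The new state depends on the previous state and the control input.
        x = [ax + b * u for ax, b in zip(matvec(A, x), B)]
        outputs.append(sum(c * xi for c, xi in zip(C, x)))
    return outputs


# Assumed toy system: two state dimensions that decay at different rates.
A = [[0.9, 0.0], [0.0, 0.5]]
B = [1.0, 1.0]
C = [1.0, -1.0]

impulse = [1.0] + [0.0] * 9  # a single kick at the first step, then silence
ys = ssm_scan(A, B, C, impulse, x0=[0.0, 0.0])
print(ys)
```

A single input at the first step keeps echoing through later outputs because the state carries it forward. That is the "current state is influenced by the previous state" behavior in action.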

By combining these three elements, you could create a system that:

Handles Time-Dependent Data Efficiently: The state space model could effectively capture the dynamics of time-dependent data, while the RNN could manage the sequential aspects of the data, maintaining context and temporal relationships.

Enhanced Long-Range Dependencies Handling: Transformers could complement this by efficiently processing longer sequences and identifying long-range dependencies, which is something traditional RNNs struggle with.

Parallel Processing and Scalability: The transformer component would allow for more efficient parallel processing of sequences, improving scalability and speed, especially for large datasets.

Improved Accuracy and Contextual Understanding: The combination could lead to improved accuracy in tasks that require an understanding of complex sequences and contexts, such as predictive maintenance, financial forecasting, or advanced language understanding tasks.

Flexibility and Adaptability: Such a hybrid system could be more adaptable to various types of sequential data and could be fine-tuned to cater to specific requirements of different applications.

In essence, this hybrid approach would aim to combine the best of all three worlds: the temporal modeling capabilities of state space models and RNNs, with the parallel processing and long-range dependency handling of transformers.

It could potentially lead to significant advancements in areas like time series analysis, complex sequence modeling, and sophisticated NLP tasks.

However, designing and training such a hybrid system would be a complex undertaking, requiring careful consideration of how these different components interact and are optimized together.

Unless there is a… simpler approach.

Normalizing Flows = Time Traveling Transformers?

Have you seen the movie Tenet where they invert time and use it as a weapon?

Some AI models can be run in reverse, too.

Called normalizing flows, these models learn a series of invertible transformations that map a simple distribution (e.g., Gaussian) onto the desired target distribution of the data.

They offer flexibility and good control over the generated samples.

That was a lot. Here’s a thought experiment with colorful balls to paint the picture.

Imagine a playground slide:

Top of the slide: This represents the starting point of your data, like a pile of colorful balls.

Bottom of the slide: This is the final output you want to generate, like a specific arrangement of the balls.

Slide itself: This is the normalizing flow model.

Just like a slide transforms the position of the balls from the top to the bottom, the normalizing flow model transforms your data into a desired output.

Here's how it works:

Start with a simple distribution: Think of the balls at the top of the slide arranged in a simple, predictable way, with most near the middle and fewer toward the edges. This is like a simple mathematical distribution, such as a bell curve.

Apply transformations: Now, imagine the slide is made of different sections, each with a unique twist or turn. These twists and turns represent mathematical operations applied by the model. Each section pushes and pulls the balls in different ways, changing their positions and spreading them out.

End with the desired distribution: As the balls reach the bottom, they are no longer arranged in that simple shape. Instead, they form a specific pattern or arrangement, representing the desired output distribution.

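Key point: The model learns the specific twists and turns of the slide (the mathematical operations) by observing many examples of the desired output. It then uses this knowledge to transform fresh samples from the simple distribution into similar patterns. Because every section of the slide is invertible, the balls can also be sent back up the slide, which lets the model work out how likely any given arrangement is.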
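Here is the slide in code: a minimal one-section flow in plain Python, assuming a single affine transformation (stretch and shift) applied to a standard Gaussian. The class name `AffineFlow` and the target data are invented for illustration. Real normalizing flows stack many learned, more expressive sections.

```python
import math
import random


class AffineFlow:
    """x = scale * z + shift, with z drawn from a standard Gaussian."""

    def __init__(self, scale=1.0, shift=0.0):
        self.scale = scale
        self.shift = shift

    def forward(self, z):
        """Down the slide: simple sample -> data-like sample."""
        return self.scale * z + self.shift

    def inverse(self, x):
        """Back up the slide: data point -> simple sample."""
        return (x - self.shift) / self.scale

    def log_prob(self, x):
        """Change of variables: base log-density minus log|scale|."""
        z = self.inverse(x)
        log_base = -0.5 * (z * z + math.log(2.0 * math.pi))
        return log_base - math.log(abs(self.scale))

    def fit(self, data):
        """For this single affine section, maximum likelihood has a closed form."""
        mean = sum(data) / len(data)
        var = sum((x - mean) ** 2 for x in data) / len(data)
        self.shift = mean
        self.scale = math.sqrt(var)


rng = random.Random(0)
data = [rng.gauss(5.0, 2.0) for _ in range(5000)]  # assumed target data

flow = AffineFlow()
flow.fit(data)
new_samples = [flow.forward(rng.gauss(0.0, 1.0)) for _ in range(5)]
print(flow.shift, flow.scale, new_samples)
```

Because `inverse` exactly undoes `forward`, the model can both generate new samples and score existing ones with `log_prob`. That two-way property is what sets flows apart.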

Benefits of Normalizing Flows:

Flexibility: You can design different twists and turns to create various output distributions, just like building a slide with different shapes.

Control: You have more control over the generated data compared to other methods, like fine-tuning the turns to achieve a specific pattern.

Interpretability: Each twist and turn is an invertible mathematical operation, so any output can be traced back to the input that produced it, making the model easier to understand and analyze.

Overall, normalizing flows are like powerful, customizable slides that can transform your data into desired shapes and patterns.

These are very computationally expensive slides to build, but the cost of compute keeps falling.

The future of artificial intelligence promises to be an exciting frontier filled with tremendous potential, yet also requiring thoughtful consideration around responsible implementation. As the Transformer architecture and its large language models continue to achieve remarkable capabilities, how this technology develops in the years ahead will have profound impacts on individuals and society as a whole.

Inside the R&D labs of major tech companies and innovative startups, the race is on to push AI capabilities even further through enhancements like increased efficiency, multimodal understanding, domain specialization, explainability, and adaptability to changing conditions.

Creative new architectures are also emerging, like Liquid AI’s liquid neural networks, which adapt to fluctuating scenarios. Hybrid approaches aim to combine the strengths of Transformers, RNNs, and other model paradigms into superior systems. Mixture-of-experts systems allow multiple specialized AI modules to collaborate on complex tasks. This modular, ensemble approach highlights the trend toward AI that can dynamically reconfigure itself to suit different needs.

As the building blocks of AI systems grow more advanced, researchers are finding novel ways to combine them, much like forming powerful multi-disciplinary innovation teams. State space models, RNNs and Transformers could fuse into architectures ideally suited for processing contextual, time-dependent data with long-range dependencies. Normalizing flows offer a way to gain precise control over model outputs.

As we stand at the cusp of these exciting developments, it's evident that the future of AI is not just about technological breakthroughs but also about how these innovations can be leveraged to create more intelligent, efficient, and intuitive systems.

The AI landscape is evolving into a tapestry of diverse approaches, each contributing to a broader understanding and application of artificial intelligence. Whether it's through enhancing existing models or pioneering new ones, the relentless pursuit of AI excellence is a journey that promises to redefine our world in unimaginable ways.

In essence, the future of AI is a narrative of convergence and innovation, where the melding of different technologies and ideas paves the way for a new era of intelligent systems.

This journey, fueled by relentless research and development, is not just about achieving technological supremacy but about unlocking the full potential of AI to create a smarter, more connected, and more intuitive world.

As we continue to push the boundaries of what's possible, the promise of AI remains a beacon of progress, innovation, and endless possibilities for the future.