Hatching the Dragon: Why This New AI Architecture Will Change How We Build

As a roll-up-my-sleeves investor, my job is to create value.

I find the most powerful new technologies and build systems that make my portfolio companies faster, smarter, and more valuable. For the last five years, that has meant one thing: Transformers. We all build on them, but we also know their secrets. They are opaque, unpredictable, and astronomically expensive “black boxes”. We can’t engineer them. We can only tame them. When they fail on complex reasoning tasks, we have no idea why.

That’s why the “Dragon Hatchling” paper from Pathway is so critical. It’s not just another incremental tweak. It’s a potential new blueprint for AI. One that is built like our brain, with thoughts we can trace and understand.

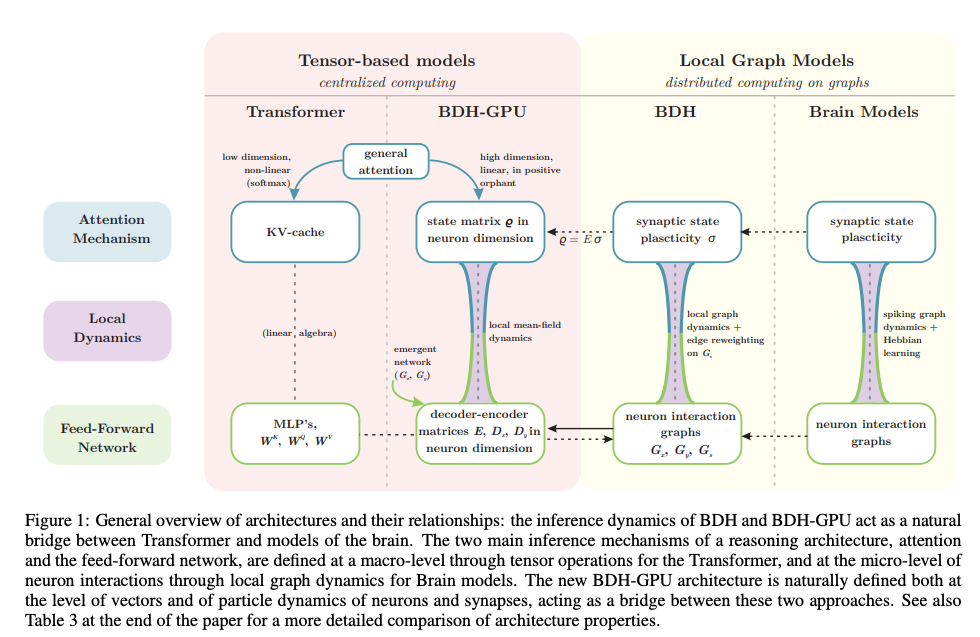

The core problem the researchers tackle is that Transformers, for all their power, don’t “reason” or generalize over time like the human brain. The brain is a “uniform, scale-free” graph while the Transformer is a stack of dense, identical blocks. For the non-mathematically inclined, a graph is just a mathematical way to describe a network:

Nodes: These are the individual processing units. In the brain, a node could be a single neuron or a larger brain region.

Edges: These are the connections between the nodes (synapses or large-scale nerve tracts).

So, saying “the brain is a graph” is just a way of saying it’s a vast, interconnected network.
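If it helps to see it, here is a minimal Python sketch of that idea. The node names and the `build_graph` helper are made up for illustration; they are not from the paper.

```python
from collections import defaultdict

def build_graph(edges):
    """Return an undirected adjacency map: node -> set of neighbouring nodes."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph

# Hypothetical toy network: four nodes, three edges.
edges = [("n1", "n2"), ("n2", "n3"), ("n2", "n4")]
graph = build_graph(edges)

# A node's "degree" is simply how many connections it has.
degree = {node: len(neighbours) for node, neighbours in graph.items()}
print(degree)
```

Everything that follows (hubs, short paths, resilience) is a statement about how those degrees are distributed.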

While the brain has many different types of neurons, at a fundamental level, they are all relatively “uniform.” They are all basic processing units that work in a similar way: they receive signals, “decide” whether to fire, and send signals to other neurons.

Think of it like LEGOs. You might have different colors and sizes (different neuron types), but they are all fundamentally the same kind of building block. This uniformity is what allows the brain to be so flexible, using the same basic parts to handle vision, sound, thought, and motion.

A scale-free network is one where the vast majority of nodes have only a few connections, but a small handful of nodes, known as “hubs,” are extremely connected, linking to thousands or millions of other nodes.

You see scale-free networks everywhere. Think of the internet. Most personal websites have very few links pointing to them. But “hubs” like Google or Wikipedia have billions of links.
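One textbook way to grow a network like this is preferential attachment: each newcomer links to an existing node with probability proportional to how many links that node already has. The sketch below uses that recipe purely as an illustration. It is an assumption on my part, not the construction the paper uses, and `grow_network` is a hypothetical helper.

```python
import random
from collections import Counter

def grow_network(num_nodes, seed=0):
    """Grow a network where each new node links to one existing node,
    chosen with probability proportional to that node's current degree."""
    rng = random.Random(seed)
    # Every edge adds both of its endpoints to this list, so a uniform
    # draw from it picks a node in proportion to its degree.
    endpoints = [0, 1]  # start from a single edge 0 - 1
    for new_node in range(2, num_nodes):
        target = rng.choice(endpoints)
        endpoints.extend([new_node, target])
    return Counter(endpoints)  # node -> degree

degrees = grow_network(10_000)
leaf_share = sum(1 for d in degrees.values() if d == 1) / len(degrees)
print("largest hub degree:", max(degrees.values()))
print("share of nodes with a single link:", round(leaf_share, 2))
```

Most nodes end up with a single link while a few early nodes become hubs, which is the rich-get-richer pattern described above.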

The brain's design is ingenious. Its hubs allow for very short “paths” between any two neurons. A signal can hop from a local neuron, to a hub, and on to another neuron in a totally different part of the brain very quickly. This makes the brain incredibly efficient at processing information. The structure is also very resilient: randomly deleting a few “normal” nodes won’t crash the system, because each of them has only a few connections. The brain is a marvel of engineering.
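To make the short-path point concrete, here is a toy comparison, assuming a ring of ten nodes with and without a single hub. The graphs and the `shortest_path_length` helper are illustrative only and say nothing about real brain wiring.

```python
from collections import deque

def shortest_path_length(graph, start, goal):
    """Breadth-first search; returns the number of hops, or None if unreachable."""
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, hops = queue.popleft()
        if node == goal:
            return hops
        for neighbour in graph.get(node, ()):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, hops + 1))
    return None

# Ring of 10 nodes: each node only talks to its two immediate neighbours.
ring = {i: {(i - 1) % 10, (i + 1) % 10} for i in range(10)}

# Same ring, plus one hub that is connected to every node.
with_hub = {i: ring[i] | {"hub"} for i in range(10)}
with_hub["hub"] = set(range(10))

print(shortest_path_length(ring, 0, 5))      # around the ring
print(shortest_path_length(with_hub, 0, 5))  # local node -> hub -> far node
```

Without the hub, the signal has to walk halfway around the ring. With it, any two nodes are at most two hops apart.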

The Pathway team asked: What if we built an AI that actually mimics the brain’s structure and learning process?

Their answer is BDH, a new model built as a “scale-free biologically inspired network” of “neuron particles”. Instead of just using dense matrix math, it models a graph of neurons that interact locally.

Its memory isn’t a temporary cache; it’s more akin to synaptic plasticity, just like in our heads. It learns using the classic Hebbian rule, “neurons that fire together, wire together,” the same way we level up.
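For builders who want the one-line version of “fire together, wire together,” the textbook Hebbian rule strengthens a connection by an amount proportional to the product of the two neurons' activity. The sketch below is that textbook rule with a made-up learning rate; it is an assumption for illustration, not BDH's actual update.

```python
def hebbian_update(weights, pre, post, lr=0.1):
    """Return new weights where weights[i][j] grows by lr * pre[i] * post[j].

    pre[i] is the activity of sending neuron i, post[j] the activity of
    receiving neuron j. lr is a hypothetical learning rate.
    """
    return [
        [w + lr * pre[i] * post[j] for j, w in enumerate(row)]
        for i, row in enumerate(weights)
    ]

weights = [[0.0, 0.0], [0.0, 0.0]]
pre = [1.0, 0.0]   # only sending neuron 0 fires
post = [1.0, 0.0]  # only receiving neuron 0 fires

for _ in range(3):
    weights = hebbian_update(weights, pre, post)

print(weights)
```

Only the connection between the two co-active neurons gets stronger; every other connection stays at zero.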

Here’s the part that should make every builder and investor sit up: it works.

BDH rivals the performance of GPT-2-architecture Transformers on language and translation tasks at the same parameter counts, from 10M to 1B, and on the same training data.

But unlike a Transformer, it’s not a black box. The architecture is interpretable.

The authors found “monosemantic synapses,” individual connections that strengthen only when the model hears or reasons about a specific concept, like a given currency or country.

The core contribution is this: BDH is the missing link that gives us the raw performance of Transformers with the structured, interpretable, and efficient design of the brain.

For us, this signals a new era. It’s a path to AI that is not just powerful, but also debuggable, reliable, and (as a stunning experiment in the paper shows) composable.

We’ve geeked out SUPER HARD before about how important composability is.

Now it appears to be here for AI.

We may finally be able to engineer intelligence, not just summon it from stochastic mathematics.

The Breakthrough

For years, our entire industry has been in an arms race built on one chassis: the Transformer. We’ve been scaling it up, feeding it more data, and throwing unfathomable compute at it, all while hoping its fundamental flaws would just solve themselves.

They haven’t.

Most of us are familiar with the two “walls” we keep hitting.

The first is the black box wall. When a Transformer hallucinates or fails at a complex, long-context reasoning task, our only recourse is to shrug, tweak the prompt, or spend millions on more training data.

We can’t debug it because the “reasoning” is smeared across billions of opaque parameters. No matter what a supposed chain of thought is showing you, you are not seeing the thinking. This is a non-starter for any mission-critical system in finance, medicine, or autonomous vehicles.

The second is the efficiency wall. The Transformer’s core “attention” mechanism scales quadratically with context length, a brutal bottleneck that costs us a fortune in memory and compute.
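A quick back-of-the-envelope shows what “quadratically” means in practice. Full self-attention compares every token with every other token, so one attention matrix has one score per pair. The helper below just counts those scores for a single head in a single layer; the context lengths are arbitrary examples.

```python
def attention_score_entries(context_length):
    """Pairwise scores in one full (unmasked) self-attention matrix,
    for a single head in a single layer."""
    return context_length * context_length

for n in (1_000, 2_000, 4_000):
    print(f"{n} tokens -> {attention_score_entries(n)} scores")
```

Double the context and the count goes up fourfold, before you multiply by the number of heads and layers.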

The BDH architecture isn’t a patch; it’s a new foundation. The innovation is to stop treating AI as abstract matrix math and start treating it as a graph-based distributed system, just like the brain.

The true breakthrough is how BDH re-imagines “attention” and “memory.”